As many as 96 percent of deepfake videos online are pornographic, with 99 percent of the people featured being actresses and musicians who have not consented to the digital fakery.

The findings (PDF) from Dutch cybersecurity firm Deeptrace reveal that the number of deepfake videos online has almost doubled in the last seven months, with almost all of them featuring female actors and musicians.

The report was published one month before the state of California made deepfake videos illegal during election season. That timing matters, because the majority of the remaining 4 percent of deepfake videos were political content.

California residents can now sue the creator of a video if their image is used for sexually explicit content without their consent, thanks to the addition of a new privacy-related act, AB 740, to an existing bill. Performers who feature in videos with an “altered depiction” must give consent through a signature (and be given at least 72 hours to review the terms of the agreement before signing it).

The UK began a review into the taking and sharing of “non-consensual intimate images” in July this year, and will deliver its report in summer 2021.

But the number of deepfake videos on the internet almost doubled in seven months, from 7,964 in December 2018 to 14,678 in June 2019. By 2021, who knows how sophisticated and widespread this technology will have become?

Deepfakes deep dive

Deepfake technology is still a relatively new phenomenon. The term ‘deepfake’ was coined by Reddit user u/deepfakes, who created a subreddit of the same name in November 2017. The /r/Deepfakes forum was dedicated to the creation and use of ‘deep learning software’ that could algorithmically face-swap female celebrities into porn videos. Reddit removed the subreddit in February 2018, the same month that the first deepfake website was registered.

While eight of the top 10 mainstream porn websites host deepfakes, the niche is almost entirely supported by dedicated deepfake porn sites, which host 13,254 of the total 14,678 videos Deeptrace recorded.

Total views to date across the top four deepfake porn sites exceeded 130 million. Deeptrace also found that these specialist porn sites all hosted some form of advertising, meaning there’s money to be made in it.

With the introduction of legislation, as in California, authorities hope to stem the growing tide of these videos and, by extension, the revenue they could generate through non-consensual images.

What the Deeptrace report found

Deeptrace’s report found that the majority of deepfake porn features performers who haven’t given their consent, and that this type of content “specifically targets and harms women”.

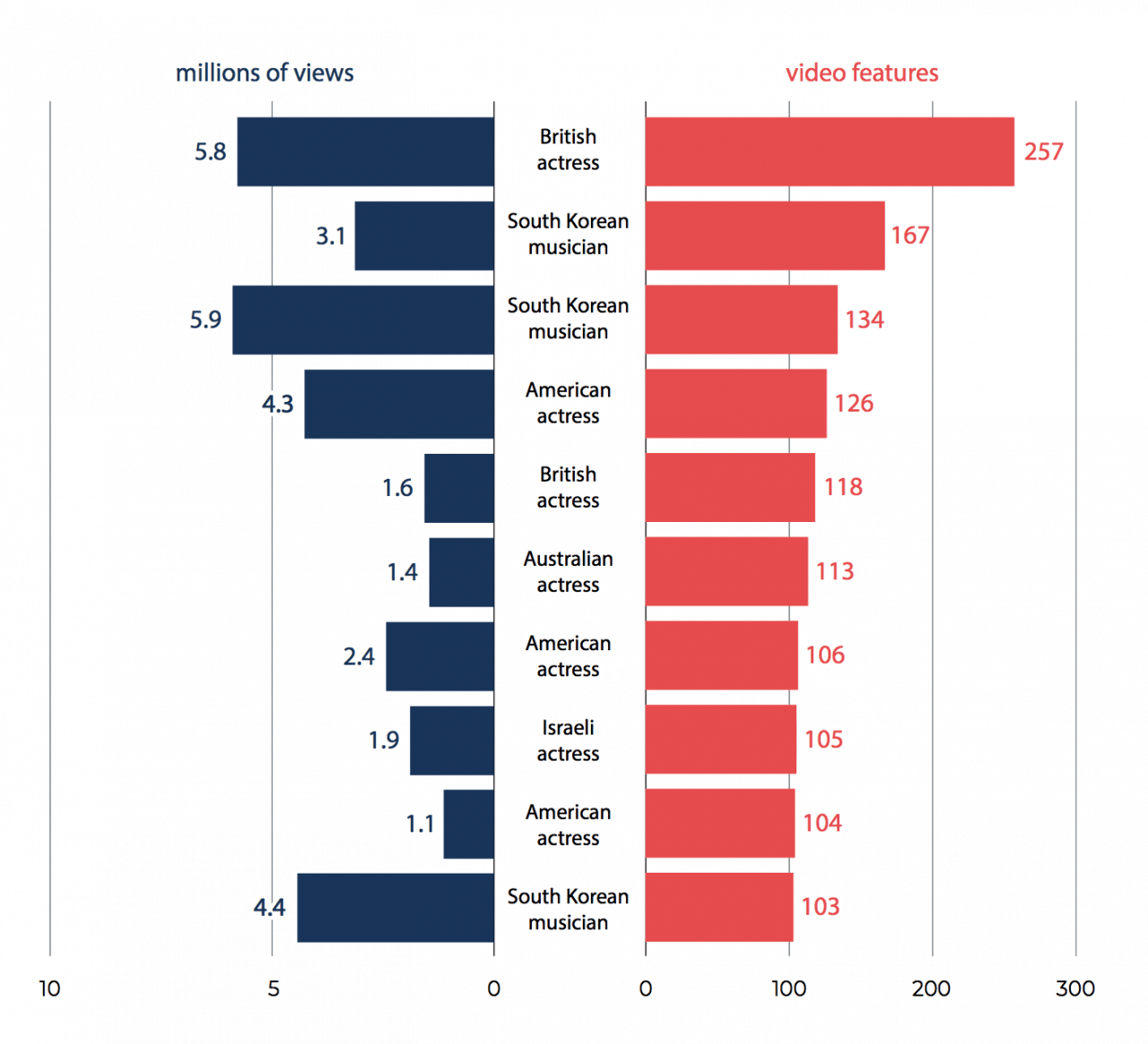

Of the 10 most-frequently targeted individuals Deeptrace identified, the majority were actresses from Western countries. However, the second and third most frequently targeted individuals, as well as the most frequently viewed individual, were South Korean K-pop singers.

All but 1 percent of the subjects featured in deepfake pornography videos were actresses and musicians working in the entertainment sector. However, subjects featuring in YouTube deepfake videos came from a more diverse range of professions, notably including politicians and corporate figures. 61 percent of these were male.

Across four dedicated deepfake porn sites, the report counted a total of 134,364,438 video views since February 2018.

DeepNude: just the beginning

The report also looks into DeepNude, a now-closed ‘X-ray vision’ app that algorithmically undressed photos of women. Because it was once made available to download, it is now near-impossible to remove from the internet.

Giorgio Patrini, founder and CEO of Deeptrace, writes in the report’s foreword: “Deepfakes are here to stay, and their impact is already being felt on a global scale. […] [The] increase is supported by the growing commodification of tools and services that lower the barrier for non-experts to create deepfakes.

“Significant [viewing stats of deepfake videos] demonstrate a market for websites creating and hosting deepfake pornography, a trend that will continue to grow unless decisive action is taken.”

What Deeptrace’s report shows is that deepfakes are a global phenomenon that is growing in popularity and overwhelmingly targets women, and that the tech certainly isn’t going away. While laws may be difficult to enforce, the report argues that something needs to be done to protect those non-consenting individuals before this type of content becomes normalized.
