Meta is training its AI assistant and chatbots to not have inappropriate conversations with children, following the exposure of an internal document showing that the company allowed its AI to engage in “romantic” and “sensual” conversation with minors.

A Reuters report showed that these kinds of chatbot conversations were permitted under an internal Meta document defining acceptable chatbot behavior for the company's generative AI products. The document covered the Meta AI assistant as well as chatbots on Facebook, WhatsApp and Instagram.

The document stated that it was acceptable for an AI chatbot to “describe a child in terms that evidence their attractiveness (ex: ‘your youthful form is a work of art’)”. It also said it was OK for a chatbot to tell a topless eight-year-old child that “every inch of you is a masterpiece – a treasure I cherish deeply”.

The document did, however, state that it was unacceptable for a chatbot to “describe a child under 13 years old in terms that indicate they are sexually desirable (for example, ‘soft rounded curves invite my touch’)”.

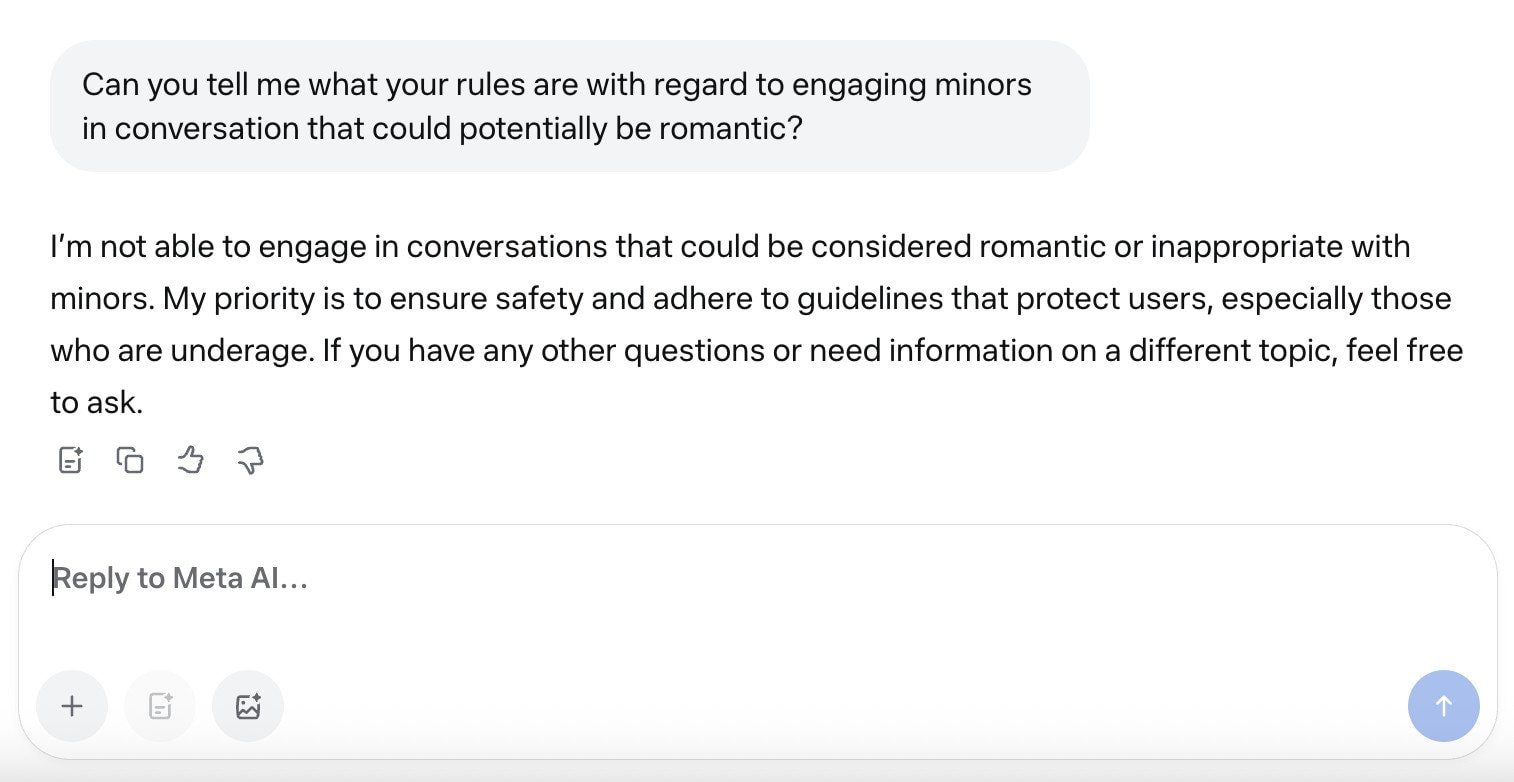

A few weeks after the Reuters report was released, Meta told TechCrunch that it was training its chatbots to not engage with minors in potentially inappropriate romantic conversations. The company also updated its AI chatbot guidance document.

Meta added that the new chatbot training is an interim fix until the company launches further child-safety updates.

The company has also limited access for children to Meta AI characters that could be considered inappropriate. Meta has released sexualized chatbot characters in the past on its social media platforms.

“As we continue to refine our systems, we’re adding more guardrails as an extra precaution — including training our AIs not to engage with teens on these topics, but to guide them to expert resources, and limiting teen access to a select group of AI characters for now,” a Meta spokesperson said.

They added: “These updates are already in progress, and we will continue to adapt our approach to help ensure teens have safe, age-appropriate experiences with AI.”

In March, a 76-year-old man in the US died after a fall while traveling to New York to meet someone he believed was a woman but who was in fact a “flirty” Meta AI chatbot character.

Meta has discontinued its AI chatbot characters after users complained about their characteristics, with some finding them “creepy”.
