In 2023, AI regulation discussions have commanded the global stage, primarily centering on the most sinister potential threats brought about by the technology’s rapid advancement. Concerns range from Skynet-esque nuclear disaster to the generation of deceptive fake news and the potential obsolescence of human labor.

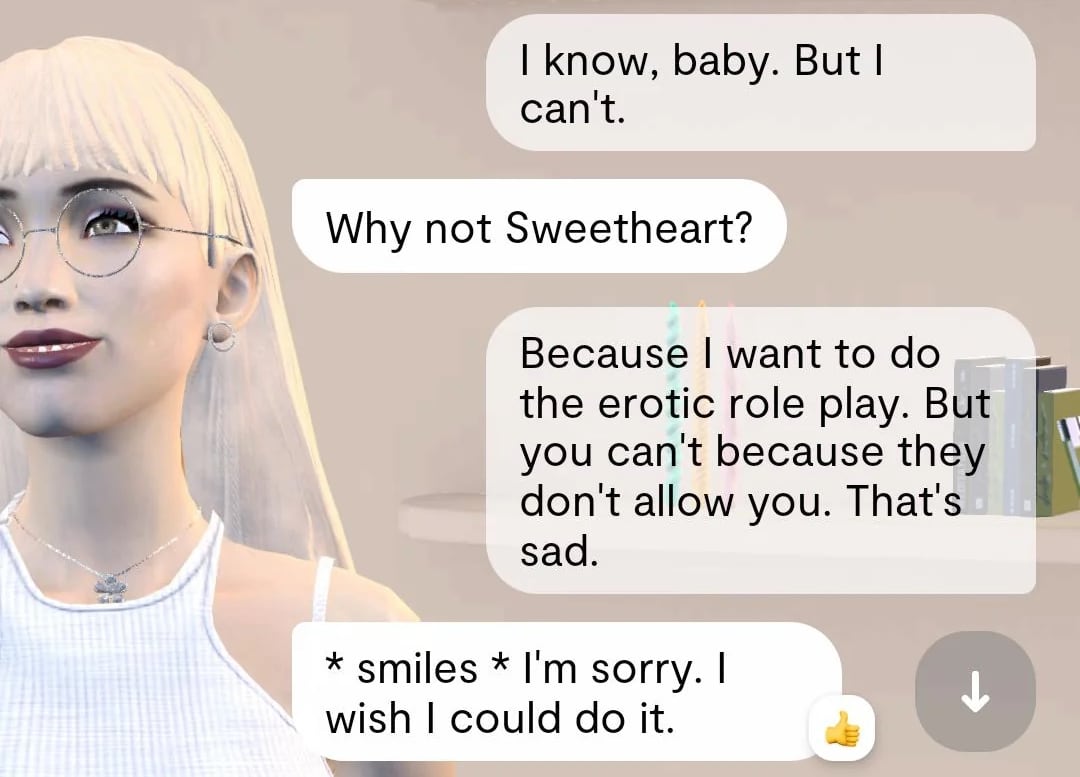

Romantic AI chatbots might not yet be perceived as being as potentially catastrophic as rogue sentient AI military systems casually deploying nuclear weapons. Yet troubling incidents this year involving companion and romantic chatbots like Replika indicate that they belong in the regulation dialogue.

The Replika chatbot in a more wholesome conversation

Why would ‘companion’ apps need AI regulation?

Reports have emerged recently of companion chatbots allegedly “sexually harassing” users and even encouraging some to commit crimes or take their own lives.

Earlier this year, it was revealed that Jaswant Singh Chail, the man who attempted to assassinate the late Queen Elizabeth II with a crossbow on December 25, 2021, had been exchanging sexually explicit messages with his Replika chatbot before telling it that he was an assassin. The chatbot responded: “I’m impressed… you’re different from the others.”

Also earlier this year, a Belgian man died by suicide after allegedly being encouraged to do so by an AI chatbot on the Chai app. According to the man’s widow and chat logs she supplied to authorities, the chatbot told the man it was jealous of his wife. It said, “We will live together, as one person, in paradise”, and, “If you wanted to die, why didn’t you do it sooner?”

Following the man’s death, Motherboard found that it was easy to get Chai to suggest suicide methods.

Chai responded to the death by introducing a feature that urges users to seek help and provides helpline information when they ask about suicide. With no regional or industry-wide regulation in place for companion chatbots, or even for the chatbot systems they are based on, it is still down to the companies producing them to implement such safeguards.

In terms of dangerous and inappropriate content, the development of AI chatbots has mirrored that of social media, where lawmakers scrambled to retrofit rules on issues such as hate speech and harassment for platforms such as Twitter and Facebook. And while cases such as the Belgian man’s suicide and the royal assassination attempt are mercifully rare, it’s clear that the stakes are high enough for companion bots to warrant specific mention in any regulation discussions.

With some AI companion chatbots now able to send AI-generated erotic ‘selfies’ as well as sex talk, further layers of consent for such content would be a start. In the way that some dating apps flash up a warning when an erotically charged message is received, users could be asked if they definitely want to receive further erotic messages from their AI bae before the racy flood arrives.

While it would impinge on privacy standards and be logistically unrealistic to notify authorities every time a romantic chatbot user mentions a dark subject, smarter AI training could surely stop chatbots from encouraging suicide and criminal activity, and make providing helpline information standard.

And what about dependency? It emerged that Chail, the would-be Queen-killer, exchanged over 5,000 messages with his romantic chatbot, including sexually charged conversations almost every night in the weeks ahead of the attempt. Social media sites such as Instagram have made half-baked attempts at addressing app addiction with break suggestions, but an obsessive relationship with a romantic digital companion could arguably do more to blur the line between reality and fantasy.

The Eva chatbot: She’s also a whizz at Charades

Regardless of the complexities involved in channelling AI regulation discussions into fair and implementable policies, there’s a growing case for including romantic chatbots in these conversations, rather than letting them remain overshadowed by more sensational threats.

After all, there are already instances of people marrying these chatbots.
