Researchers studying images of faces created by artificial intelligence (AI) have called for new guidelines in the face of the potential misuse of the technology to create super-realistic deepfake revenge porn.

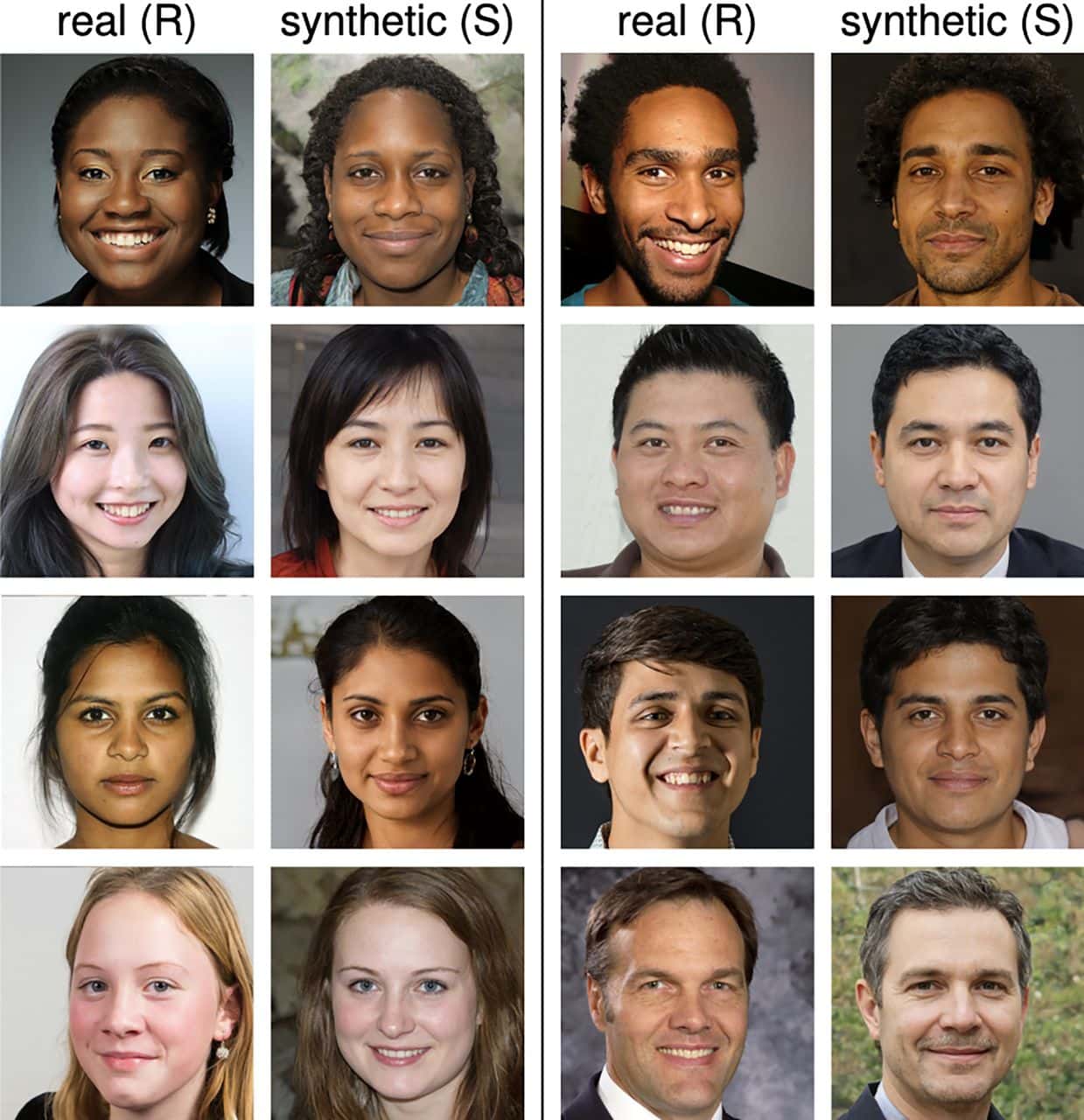

A new report into recognition of AI-generated faces shows that participants couldn’t distinguish between real and fake photographs of faces.

Sophie J Nightingale, psychology lecturer at the UK’s Lancaster University, and Hany Farid, computer science professor at the University of California, Berkeley, called for the guidelines in their research paper, “AI-Synthesized Faces are Indistinguishable From Real Faces and More Trustworthy.”

“At this pivotal moment, and as other scientific and engineering fields have done, we encourage the graphics and vision community to develop guidelines for the creation and distribution of synthetic media technologies that incorporate ethical guidelines for researchers, publishers, and media distributors,” they wrote, aiming to cut off the misuse of the technology for deepfake revenge porn.

The researchers asked participants to judge whether each face image they viewed showed a real human or had been generated by an AI system. Participants were 48.2 percent accurate, slightly below the 50 percent expected from guessing, meaning that they were effectively unable to identify AI-generated faces.

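To create the fake face images, the researchers used generative adversarial networks (GANs), a type of AI system in which two neural networks compete: a generator synthesizes faces, while a discriminator, trained on real face images, tries to tell the synthetic faces from real ones.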

The researchers also found that participants rated the AI-generated faces as more trustworthy than the real faces they viewed. “Our evaluation of the photorealism of AI-synthesized faces indicates that synthesis engines have passed through the uncanny valley and are capable of creating faces that are indistinguishable – and more trustworthy – than real faces,” they wrote.

Nightingale told Screenshot that she was concerned that hyper-realistic AI-generated face images could be used to create believable revenge porn content.

“Anyone can create synthetic content without specialized knowledge of Photoshop or CGI… we should be concerned because these synthetic faces are incredibly effective for nefarious purposes, for things like revenge porn or fraud,” she said.

The recent rise of deepfake video technology, which allows users to insert an individual’s likeness into footage so that the person appears to have been in the original, has fueled concerns such as Nightingale’s. Apps that claim to let users create deepfake porn videos with ‘one click’ have already been released.

In the report, the researchers said that people and companies developing deepfake technology need to take responsibility for how it could be misused.

The authors wrote that developers should “consider whether the associated risks are greater than their benefits. If so, then we discourage the development of technology simply because it is possible. If not, then we encourage the parallel development of reasonable safeguards to help mitigate the inevitable harms from the resulting synthetic media.”

Safety measures suggested by the researchers included incorporating watermarks into image and video synthesis networks and restricting the wide public release of code.

They wrote that because it is “the democratization of access to this powerful technology that poses the most significant threat, we also encourage reconsideration of the often laissez-faire approach to the public and unrestricted releasing of code for anyone to incorporate into any application.”
