You’d need to have been living under a digital rock to miss the uproar about the DeepNude app, which launched briefly earlier this year before being yanked offline following the backlash. Well, that’s a genie that isn’t going back in the bottle, as the launch of two new services that use the same technology proves.

If you did happen to miss the previous news, the original DeepNude app allowed people to upload images of a clothed woman, after which the algorithm would superimpose realistic-looking breasts and vulva onto the image. It’s for this reason that DeepNude was referred to as an ‘x-ray vision’ app.

Clearly, this throws up a whole host of potentially problematic scenarios, including the production of revenge porn depicting someone who has never taken or shared naked images.

Never going away

More problematic, however, is that the original DeepNude software was released online briefly before being pulled. Obviously, in that time, some people downloaded it – and a few of them forked it to produce their own DeepNude-based services.

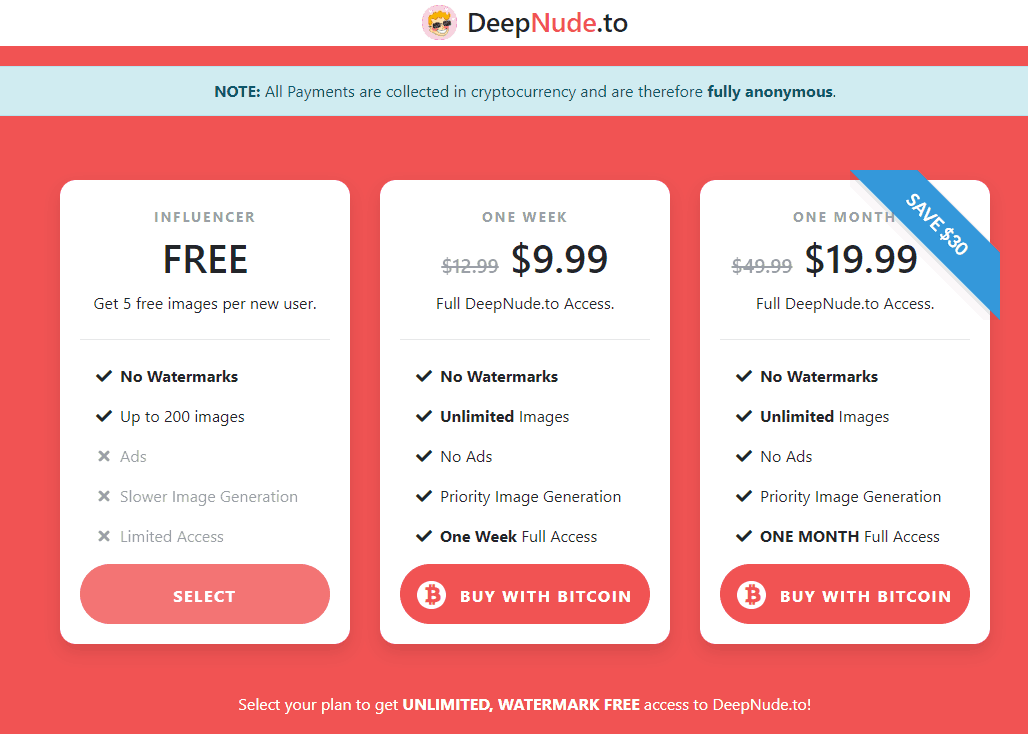

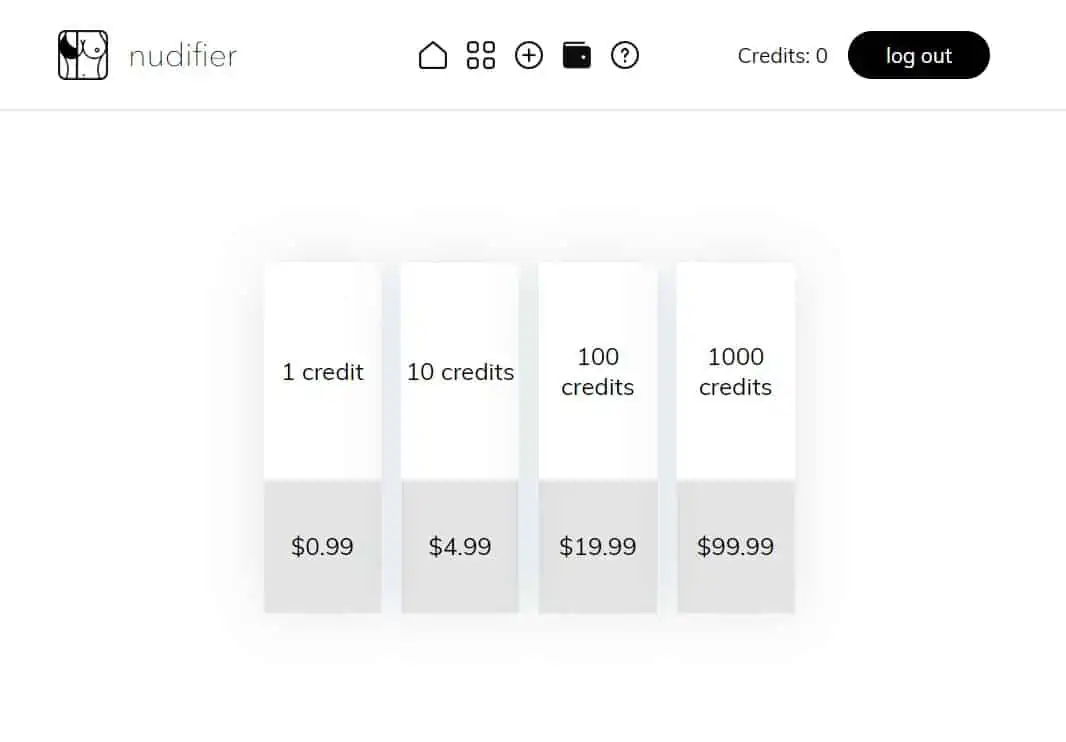

Both of the services uncovered so far – DeepNude.to and NudifierApp – charge users for access to the software. The former offers five free credits, while the latter offers only paid plans, albeit with wider app support.

The legality of producing these images, however, seems questionable. There are already laws against revenge porn (though these, arguably, aren’t really working), so we got in touch with the anonymous founders behind DeepNude.to and NudifierApp to get their perspective.

“We are not explicitly breaking any existing law. Moreover, we have terms of service that the users have to accept before uploading any images. Besides that, we are embedding visible and invisible watermarks to highlight that this content is fake,” one of the DeepNude.to team told SEXTECHGUIDE. “Plus let’s be honest it is miles away from looking even remotely real. If you want to do damage, you will be using far more sophisticated tools.”

“There are a lot of forks of DeepNude and people who want to use the technology maliciously can do it regardless of our existence. But usually it is still easy to identify whether the nude is created by [a neural] net,” the anonymous founder of the NudifierApp told us.

Indeed, he added that if the technology became accurate enough, it would actually help solve the problem of revenge porn – an argument that seems a little circular from where we’re sat.

“The quality mostly depends on thoroughness of [the person] using advanced mode. So you can even reach the same result as a professional fake artist in just one minute or less. But look, if deepnudes become indistinguishable from real nudes (which will never happen, of course), it will solve any issues related to revenge porn forever. So we don’t see any ethical problems regardless of deepnude quality. It is just a mass-media panic.”

Libertarian ideals

Both services, if you check them out yourself, are run entirely anonymously, and both are currently available only to people willing to pay in cryptocurrencies. However, while NudifierApp says that the crypto requirement is there to ensure user privacy, DeepNude.to says it plans to accept credit card payments.

“We are libertarian in our day to day life. We seek privacy in this world dominated by big data companies that can already predict our behaviour, keep profiles on our taste of food, leisure and even the most intimate desires. We would like to keep the deepnude.to visitors away from this and protect their privacy. We are going to introduce credit card payments quite soon, but to be honest, I am not the biggest fan of credit cards.”

Then, of course, there’s the question of potential restrictions on what users can upload. Giving people unfettered access to nude-image generation without filters in place seems, at the very least, like a really bad idea. NudifierApp’s founder disagrees.

“We wanted to add some kind of filter to pre-check that the image is not a cartoon frame or a male image and that there are not too many clothes… But this turned out to be unnecessary, as our service is paid: people just don’t want to spend money on knowingly awful result. We understand the question was about filters to prevent producing [images of minors] and so on, but we cannot even treat a produced image as porn, as it is just an art created by the artificial artist and nothing more. We do not know and cannot know what is covered by one’s clothing without their straight consent. It is as true as physical laws.”

This argument, of course, somewhat ignores that animated or illustrated material can still be deemed illegal to view.

DeepNude.to, on the other hand, seems to want to play a more proactive role in preventing misuse.

“You can upload anything from a bikini model to your brand-new IKEA furniture… It’s not what you upload, it’s how the AI is trained. Currently, it can process adult human women in swimsuits and underwear with the best success ratio. We are very active in preventing potential abuse and are presently implementing additional monitoring utilities that will help to moderate the content and report violations of the Terms and Conditions,” the team said in a statement.

For whatever it may be worth, DeepNude.to says that it has no intention of remaining in stealth mode forever and that it will, in time, make its team and operations public.

“We are a team of likeminded individuals with very diverse professional backgrounds… Our team is spread over different geographic locations. Currently, as we are exploring, and as it is quite a sensitive subject, we prefer not to be in the limelight. We are not making any significant efforts to hide as well, so with time, we are going to have a company behind it. Content moderation, customer support basically the whole nine yards. Hate it or love it, but we are here to stay.”

The DeepNude tech behind the outrage

Both services say that significant improvements have been made over the originally released DeepNude app, but that they draw from the same core tech.

The original algorithm consisted of three steps, and the automatic modes on both services still use this process:

- First, it detects clothing, then estimates the positions, shapes and sizes of the body parts.

- Then the resulting two images are combined and fed into the last network, which changes the areas that are covered with clothing.

- If there are inconsistencies in the image, the algorithm attempts to apply heuristics to fix them (for example, if two breasts but only one nipple were detected). This step relied on empirically chosen numbers and thus required a specific image size: 512×512 pixels.

At its core, it’s based on Pix2Pix, a general-purpose image-to-image translation algorithm that isn’t specific to this use. Both services, though, have a more advanced manual mode that takes the capabilities further.

“In the original version one couldn’t remove only panties or any other specific part of clothing without touching anything else. We managed to overcome these limitations by allowing the user to choose which pieces of clothing they want to remove and what they want to draw instead,” the NudifierApp team said.

Meanwhile, at DeepNude.to, the company says “we changed the original code to run on our proprietary platform and improved the performance for our users to get the DeepNude picture delivered almost instantly.” DeepNude.to also says that it’s working on an improved algorithm that will work on men as well as women.

DeepNude.to’s spokesperson also said that there is no relation between the two DeepNude apps. Regardless, this won’t be the last of them.
